Let’s jump right in at the only place we can: the very beginning. The programs we write every day are built on orchestrated algorithms and data structures that all have their roots in a single thing: LOGIC. Let’s quickly explore some basic logical rules, as we’re going to build on them later on.

Logic… What Exactly Is It?

The first, most obvious question is how we are defining the term “logic”. There are a few different definitions, so let’s start with the first, offered by Aristotle. In trying to come up with a framework for thinking, Aristotle described what are known today as The Laws of Thought. Let’s take a look at each one using JavaScript!

The first is The Law of Identity, which simply states that a bit of logic is whole unto itself - it’s either true, or false, and it will always be equal to itself.

Here we’re describing this as x === x, which… yes… I know seems perfectly obvious but stay with me.

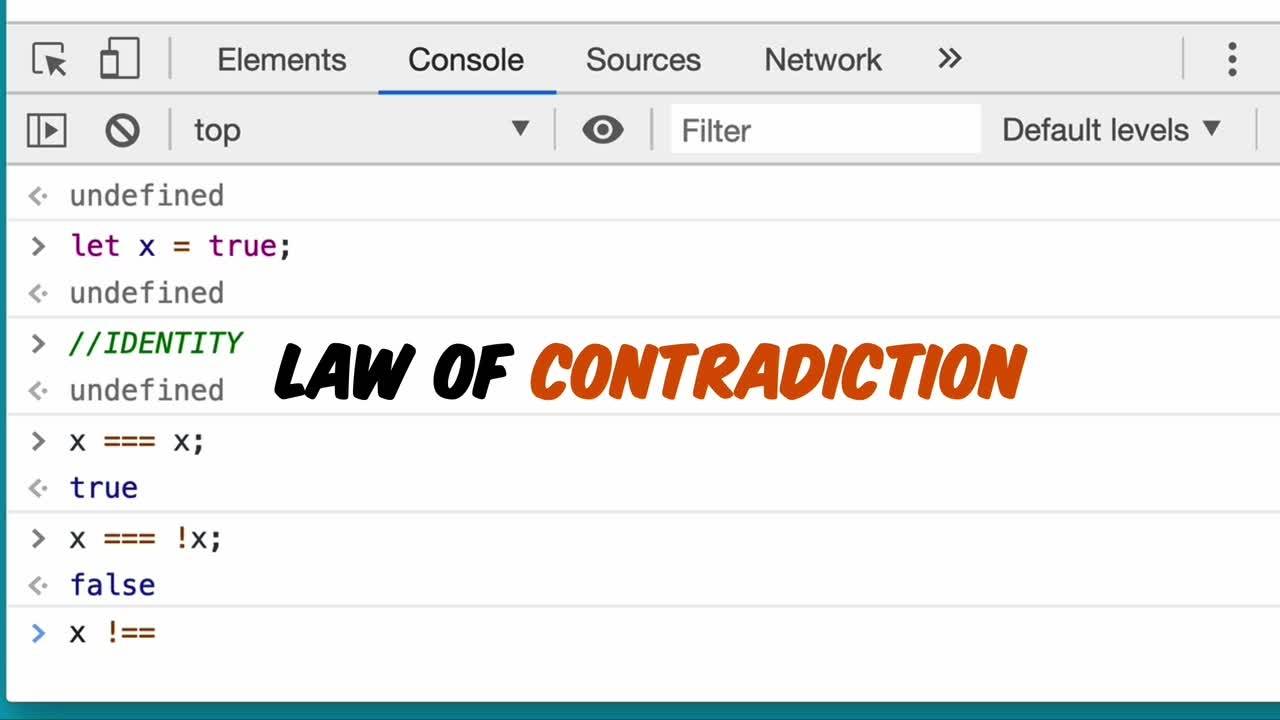
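As a minimal sketch of the Law of Identity in code (the variable names `xIsItself` and `yIsItself` are mine, purely for illustration), a boolean value always compares equal to itself:

```javascript
// Law of Identity: a logical value is always equal to itself.
const x = true;
const y = false;

// Illustrative names, not from the original text.
const xIsItself = x === x; // true
const yIsItself = y === y; // true

console.log(xIsItself, yIsItself); // prints: true true
```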

The next law is called The Law of Contradiction, which states that a logical statement cannot be both true and false at the same time. Put another way - a true statement is never false, and a false statement is never true.
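One way to sketch this in JavaScript, assuming we model a "logical statement" as a plain boolean `p` (my own framing, not Aristotle's), is to check that "p and not p" comes out false for both possible values:

```javascript
// Law of Contradiction: a statement cannot be both true and false at once,
// so "p AND NOT p" is false for every boolean p.
const contradictions = [true, false].map((p) => p && !p);

// Illustrative check that the law holds for both possible values.
const lawHolds = contradictions.every((result) => result === false);

console.log(contradictions); // prints: [ false, false ]
console.log(lawHolds); // prints: true
```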

Again - this is perfectly reasonable and seems obvious. Let’s keep going with the third law: Excluded Middle.

This one’s a bit more fun as it states that something can either be true or false - there is no in-between. Using JavaScript we can demonstrate this by setting x and y to true and false and playing around with different operations - the only thing that is returned, based on those operations, is true or false - that’s Excluded Middle in action.
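Here is a rough sketch of that experiment. The particular operations are my own pick, since any combination of boolean operators would do; the point is only that every result is either true or false:

```javascript
// Excluded Middle: every operation on booleans yields true or false.
const x = true;
const y = false;

// A handful of operations chosen for illustration.
const results = [x && y, x || y, !x, !y, x === y, x !== y];

// Confirm that nothing other than true or false ever comes back.
const allBoolean = results.every((r) => r === true || r === false);

console.log(results); // prints: [ false, true, false, true, false, true ]
console.log(allBoolean); // prints: true
```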

And right about now some of you might be bristling at this.

Ternary Logic

As I’ve been writing the statements above we’ve been seeing JavaScript evaluate the result of each, which has been undefined. The idea here is that there’s a third state, that’s neither true nor false - which is undefined. You can also think of this as null for now even though, yes, null and undefined are two different things. We’ll lump them together for the sake of defining what’s known as “ternary logic” - or “three state logic” which kicks Excluded Middle right in the teeth.
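To make that third state concrete, here is a small sketch (the variable `z` and the helper names are hypothetical) showing a value that is neither true nor false:

```javascript
// A variable declared without a value holds undefined.
let z;

const isTrue = z === true; // false
const isFalse = z === false; // false
const isUndefined = z === undefined; // true

console.log(isTrue, isFalse, isUndefined); // prints: false false true
```

So `z` fails both the "is it true?" and the "is it false?" test, which is exactly the in-between that Excluded Middle says can't exist.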

Problems

Aristotle had a problem with his logical system - it only deals with things that are known. The only things we can know for sure are things that have happened already and that we have witnessed somehow… even then there’s a question of whether we truly know them. Let’s sidestep that rabbit hole.

When asked to apply his Laws of Thought to future events - such as “will Greece be invaded this year” Aristotle replied that logic cannot apply to future events as they are unknowable. An easy out, and also a lovely transition to Ternary Logic.

Determinism

Let’s bring this back to programming. You and I can muse all day about whether Aristotle’s brand of logic - which we can call “binary” for now - is more applicable or whether the world can be better understood with the more flexible ternary logic. But I don’t want to do that because I’m here to talk about computer programming and for that there’s only one system that we can think about - a deterministic system.

If you read the first Imposter’s Handbook you’ll likely remember the chapter on determinism (and non-determinism). If not - a simple explanation is that a deterministic system means that every cause has an effect and there is no unknown.

Programs are deterministic because computers are deterministic. Every instruction that a computer is given is in the form of groups of 1s and 0s… there are no undefined middle points. This is important to understand as we move forward - the math that we’re about to get into and the very advent of computer science is predicated on these ideas. I know what you’re thinking though…

What About Null, None, Nil or Undefined?

Programming languages define much more than simply true or false - they also include the ability to have something be neither in the forms of null, nil, none or undefined. So let me ask you a question… is that logical?

Let’s take a look.

JavaScript (and many other languages) will default an unassigned variable to an unknown value such as null or, in JavaScript’s case, undefined. If I ask JavaScript if something undefined is equal to itself, the answer is true. If I ask whether it’s not equal to itself the answer is false - so far so good.

What about being equal to not-not itself? Well that’s false as well, which makes a bit of distorted sense because !y is true so !!y returns false… I guess. But if something is !undefined … what is it? To JavaScript… it’s simply true.
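The checks described in the last two paragraphs can be sketched like this. The original screenshots aren't reproduced here, so the exact statements and the constant names are my assumption:

```javascript
// y is declared but never assigned, so it holds undefined.
let y;

const equalsItself = y === y; // true
const notEqualsItself = y !== y; // false

const negated = !y; // true: undefined is falsy
const doubleNegated = !!y; // false
const equalsDoubleNegation = y === !!y; // false: undefined is not false

console.log(equalsItself, notEqualsItself); // prints: true false
console.log(negated, doubleNegated, equalsDoubleNegation); // prints: true false false
```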

We can short-circuit our brains thinking about this but let’s not…

The Billion Dollar Blunder

Tony Hoare, who introduced the null reference while designing ALGOL W in 1965, is credited with creating the concept of null in a programming language:

I call it my billion-dollar mistake…At that time, I was designing the first comprehensive type system for references in an object-oriented language. My goal was to ensure that all use of references should be absolutely safe, with checking performed automatically by the compiler. But I couldn’t resist the temptation to put in a null reference, simply because it was so easy to implement. This has led to innumerable errors, vulnerabilities, and system crashes, which have probably caused a billion dollars of pain and damage in the last forty years.

Have you ever battled null problems in your code or tried to coerce an undefined value into some kind of truthy statement? We all do that every day.

Computers aren’t capable of understanding this. Programming languages are, apparently, and at some point the two need to reconcile what null and undefined mean. What makes this worse is that different languages behave differently.

Ruby

Ruby defines null as nil and has a formalized class, called NilClass for dealing with this unknowable value. If you try to involve nil in a logical statement, such as greater or less than 10, Ruby will throw an exception. This makes a kind of sense, I suppose, as comparing something unknown can be … anything really.

But what about coercion? As you can see here, nil will be evaluated to false and asking nil if it’s indeed nil will return true. But you can also convert nil to an array or an integer… which seems weird… and if you inspect nil you get a string back that says “nil”. We’ll just leave off there.

JavaScript

JavaScript is kind of a mess when it comes to handling null operations, as it will try to do its best to give you some kind of answer without throwing an exception. 10 * null is 0, for instance… I dunno…
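A quick sketch of that arithmetic. The multiplication is the one mentioned above; the addition and the undefined case are extra examples I've added for contrast, and the constant names are illustrative:

```javascript
// In arithmetic, JavaScript converts null to the number 0 instead of throwing.
const product = 10 * null; // 0
const sum = 10 + null; // 10

// undefined is treated differently: it converts to NaN.
const withUndefined = 10 * undefined; // NaN

console.log(product, sum, withUndefined); // prints: 0 10 NaN
```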

It’s the last two statements that will bend your brain, however, because 10 <= null is somehow false… but 10 >= null is true. I know JavaScript fans out there will readily have an answer… good for them. I’m sure there’s a way to explain this but honestly it’s nonsensical to begin with because, as I’ve mentioned, null and undefined are abstractions on top of purely logical systems. Each language gets to invent its own rules.
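For the curious, here is a sketch of those comparisons. I'm assuming the two statements in question compare 10 against null, and I've added two extra checks against 0 to show where the rules diverge:

```javascript
// Relational operators convert null to the number 0 before comparing.
const lessOrEqual = 10 <= null; // false, evaluated as 10 <= 0
const greaterOrEqual = 10 >= null; // true, evaluated as 10 >= 0

// Loose equality uses a different rule: null only equals null or undefined.
const nullAtLeastZero = null >= 0; // true
const nullLooselyEqualsZero = null == 0; // false

console.log(lessOrEqual, greaterOrEqual); // prints: false true
console.log(nullAtLeastZero, nullLooselyEqualsZero); // prints: true false
```

So null is "greater than or equal to" zero without being equal to zero or greater than it, which is the kind of thing each language gets to decide for itself.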

If you ask JavaScript what type null is you’ll get object back - which isn’t true, as MDN states:

In the first implementation of JavaScript, JavaScript values were represented as a type tag and a value. The type tag for objects was 0. null was represented as the NULL pointer (0x00 in most platforms). Consequently, null had 0 as type tag, hence the bogus typeof return value.
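You can see this for yourself with a couple of lines. The `isNull` helper is a hypothetical name I'm using to show the usual workaround of a strict equality check:

```javascript
// typeof reports "object" for null, a leftover from the first implementation.
const typeOfNull = typeof null; // "object"
const typeOfUndefined = typeof undefined; // "undefined"

// Hypothetical helper: strict equality is a reliable way to detect null.
const isNull = (value) => value === null;

console.log(typeOfNull, typeOfUndefined); // prints: object undefined
console.log(isNull(null), isNull({}), isNull(undefined)); // prints: true false false
```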

C#

Let’s take a look at a more “structured” language - C#. You would think that a language like this would be a bit more strict about what you can do with null… but it’s not! OK it DOES throw when you try to compare null to !!null - that’s a good thing, but when you try to do numeric comparisons… hmmm

And null + 10 is null? I dunno about that.

The Point

So, what’s my point with this small dive into the world of logic and null? It is simply that null is an abstraction defined by programming languages. It (as well as undefined) has no place in the theory we’re about to dive into. We’re about to jump into the land of pure logic and mathematics, electronic switches that become digital… encoding… encryption and a bunch more - none of which have the idea of null or undefined.

It’s exciting stuff - let’s go!